Score Matching, Toy Datasets, and JAX

Mon 31 October 2022

Exploring the score matching technique on the ring dataset with JAX

Thu 25 February 2021

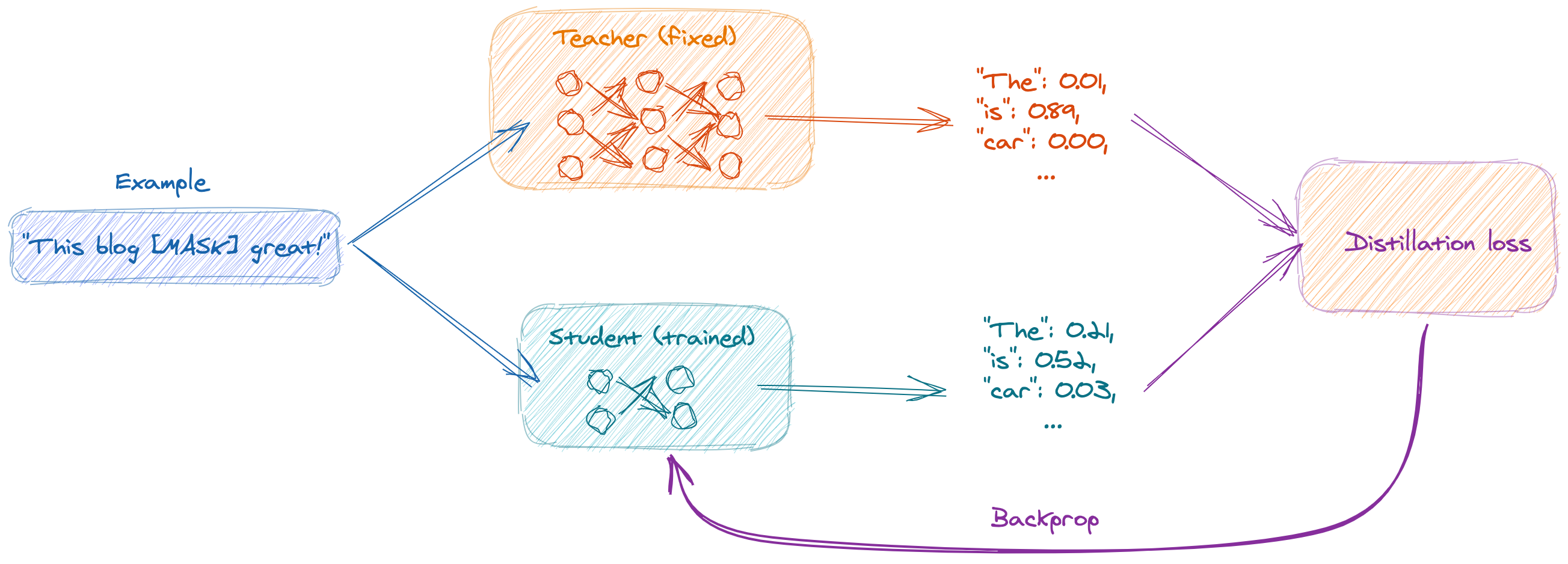

Leaner and faster Transformers with distillation. Fine-tuning BERT-tiny in a couple of minutes with PyTorch.

Fri 29 January 2021

Fine-tuning DistilBERT to tag toxic comments on Wikipedia, using TensorFlow and Hugging Face's transformers library.

Sat 29 February 2020

Exploring the mechanics of the SHAP feature attribution method with toy examples. Presentation of Kernel SHAP.

Fri 27 December 2019

Exploring the mechanics of the SHAP feature attribution method with toy examples. Presentation of Shapley values.

Sun 19 August 2018

Learning multi-class classification with only a handful of examples per class may seem impossible at first. Let's see how we can tackle this problem with textual data.

Tue 12 September 2017

Playing with the LIME machine learning model interpretation tool, part 2: deep learning.

Mon 07 August 2017

Playing with the LIME machine learning model interpretation tool.